Compute Flows to Where It's Treated Best

AI compute deserves better

By Dr. Steven Waterhouse, Nazaré Ventures

The End of AI Abundance

The illusion of infinite compute, powered by global trade and just-in-time logistics, is breaking down because the systems, assumptions, and institutions that shaped globalization over the past eight decades are no longer intact. In the coming years, intelligence will shift to cost-aware, constraint-shaped systems – a movement we call MACHA (Make AI Cheap Again).

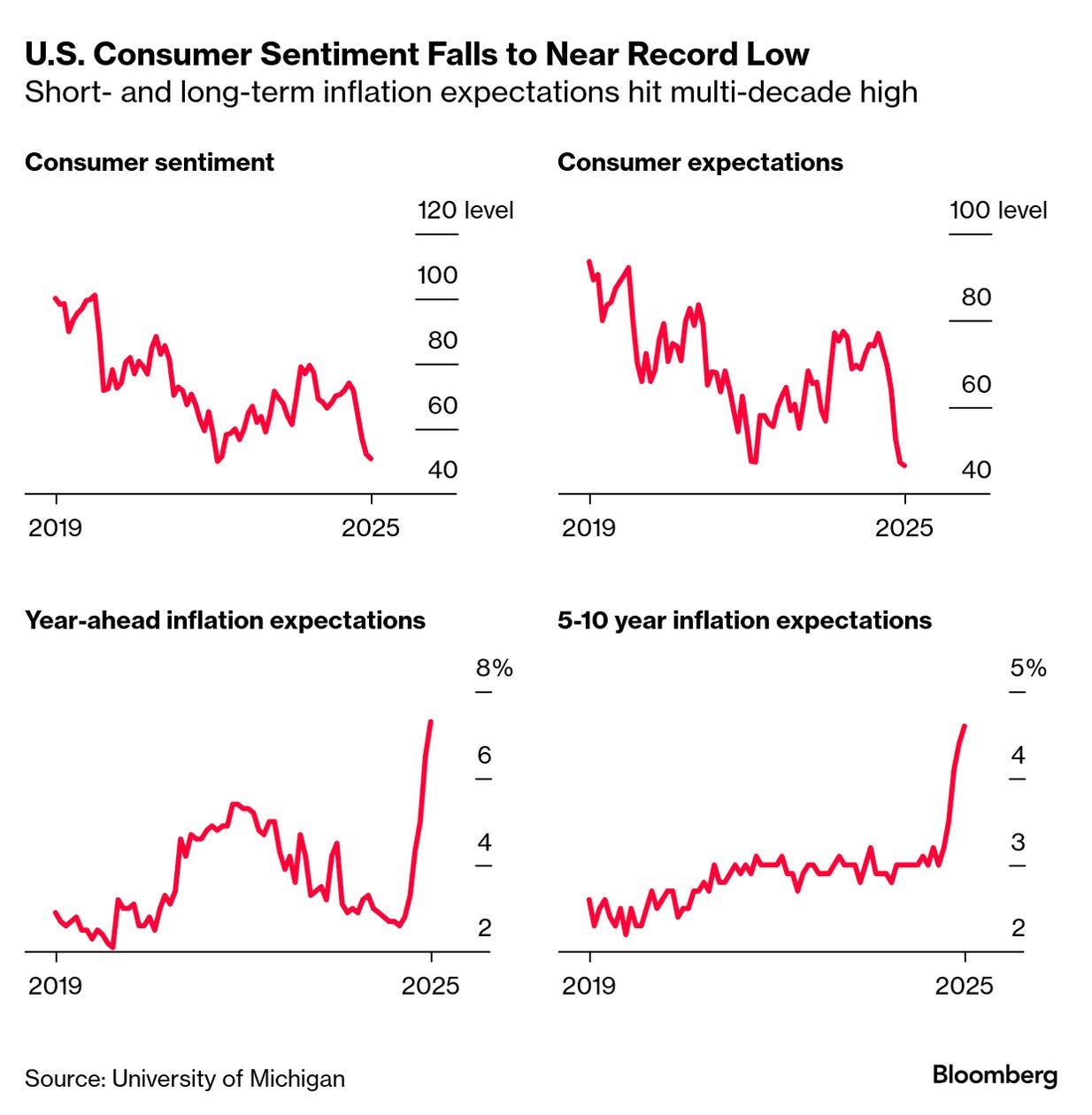

Though global trade and markets have rebounded since Liberation Day, the US has lost its last remaining top-tier credit rating, supply chains are stressed, trade relations are being rewritten, and technological infrastructure (once open and borderless) is now a matter of national security. Squarely at the center of this upheaval stands AI.

Much attention is paid to growth forecasts, inflation, and consumer sentiment, but the deeper story is how artificial intelligence will evolve in a world that is less integrated, more volatile, and increasingly shaped by economic, industrial, and fiscal policy.

No longer a neutral force of innovation, AI has become an instrument of national power, a pressure point in global trade, and a fault line in this brave new world. Tariffs introduce not only cost and complexity to the flow of goods, but uncertainty into the lifeblood of AI development: compute, data, and supply chain coordination.

AI as Geopolitical Infrastructure

Governments now treat AI infrastructure as a sovereign-grade asset. Semiconductors, model weights, inference hardware, training data – these are no longer the concern of technologists alone. They are matters of national capability and geopolitical positioning. Regulatory fragmentation, export controls, and strategic subsidies are to be treated as the new baseline, not as exceptions or edge cases.

This new set of restrictions – tariff-driven uncertainty, constrained supply chains, and politicized access to infrastructure – is forcing a re-evaluation of how AI is built and deployed. The focus is shifting from maximalism to sufficiency, and from abstraction to localism. Importantly, this is both an economic and a philosophical adjustment.

In this new environment, compute flows to where it’s treated best. That means environments where regulatory posture is predictable, infrastructure is reliable, and policy supports experimentation (as opposed to stifling it). Jurisdictions that once competed on cost or capital are now competing on stability and sovereignty. Developers, startups, and enterprises alike are increasingly factoring these variables into their deployment strategies.

This realignment mirrors broader economic patterns. The IMF projects that global growth will slow to 2.8 percent this year, with inflation nudging higher due to trade frictions. Global trade volumes are now expected to grow more slowly than output. For AI, this introduces second- and third-order effects: delayed hardware shipments, reduced investment in frontier-scale systems, and greater hesitancy among firms exploring automation at scale.

Strategic Intelligence Infrastructure

Counterintuitively, these constraints create a powerful force for acceleration. The pressure to "do more with less" is a requirement now, not a choice. More than a simple concession to scarcity, the era of cost-efficient AI is the beginning of a more grounded and sustainable phase of deployment. Organizations that once needed hyperscaler partnerships and enormous budgets to integrate AI are discovering, under pressure, that they can achieve meaningful outcomes with smaller, more targeted systems – especially when those systems are optimized for their environment or application.

We are seeing the rise of "strategic intelligence infrastructure": systems designed not just for performance, but for flexibility, resilience, and tolerance of regulatory uncertainty. Open-source models like Meta’s Llama, Mistral AI’s models, and Stability AI’s Stable Diffusion are gaining traction because they are inexpensive and offer both control and transparency (to varying degrees).

Smaller models optimized for specific use cases are becoming more attractive than massive, general-purpose systems. Decentralized compute networks, long relegated to academic interest or crypto-native experimentation, are now under serious consideration as alternatives to hyperscale dependence because they can be significantly cheaper and more resilient.

We call this dynamic MACHA (Make AI Cheap Again). Good-enough compute, strategically deployed, is a feature, not a flaw. As such, the real frontier is deployment agility, not model size. Intelligence must now operate across a continuum: from data centers to edge devices, from specialized agents to multi-purpose assistants. The winning infrastructures – contrary to popular belief – will not be the ones that are most vertically integrated but those that are most effectively distributed.

The MACHA “Future Stack”

Making AI Cheap Again is only possible with a robust toolkit. Thankfully, a “future stack” already exists, and the next wave of AI will be built on it.

Distributed Architectures & Democratized Hardware: Forward-thinking developers are exploring alternatives to premium GPUs, incorporating consumer-grade hardware, ARM processors, RISC-V architectures, and application-specific integrated circuits into AI workflows.

Open Source Frameworks: Tools like vLLM, MLC, and Hugging Face’s ecosystem have dramatically lowered entry barriers while creating sustainable business models through enterprise support services.

Low-Cost Cloud Alternatives: Specialized providers such as Vast.ai are challenging established players by offering substantial cost advantages for training and inference, in part by unlocking idle compute through peer-to-peer marketplaces.

Training and Inference Outside of Mega Clusters: Companies like Prime Intellect and Nous Research are enabling training and inference outside of traditional mega clusters using distributed GPUs and open-source models, pushing the limits of what’s possible.

Local Intelligence on the Edge: Apple’s Neural Engine exemplifies a tiered intelligence model where sensitive, real-time tasks are processed locally on-device, while complex computations are selectively offloaded to the cloud. This tiering improves speed, efficiency, and privacy by keeping data close to the user and reserving cloud resources for only what’s necessary.
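
The tiered model in the last item can be made concrete with a few lines of routing logic. What follows is a minimal sketch under stated assumptions, not a description of how Apple or any vendor actually does it: the `Task` fields, the `route` function, and the `ON_DEVICE_BUDGET` threshold are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical ceiling on the work a local chip can take per request.
# The unit is arbitrary; only the comparison matters in this sketch.
ON_DEVICE_BUDGET = 50.0


@dataclass(frozen=True)
class Task:
    name: str
    sensitive: bool   # touches private user data
    realtime: bool    # needs an immediate answer
    est_cost: float   # rough compute estimate, same unit as the budget


def route(task: Task, device_budget: float = ON_DEVICE_BUDGET) -> str:
    """Return "device" or "cloud" for a task (illustrative policy only)."""
    # Sensitive or real-time work stays local, even if it has to run
    # on a smaller model than the cloud could offer.
    if task.sensitive or task.realtime:
        return "device"
    # Anything else that fits locally also stays local, so the cloud
    # is reserved for what is actually necessary.
    if task.est_cost <= device_budget:
        return "device"
    return "cloud"


if __name__ == "__main__":
    examples = [
        Task("wake-word detection", sensitive=True, realtime=True, est_cost=1.0),
        Task("keyboard autocomplete", sensitive=False, realtime=False, est_cost=5.0),
        Task("long-document summary", sensitive=False, realtime=False, est_cost=500.0),
    ]
    for t in examples:
        print(f"{t.name} -> {route(t)}")
```

The point of the sketch is the ordering of the checks: privacy and latency decide first, capacity decides second, and the cloud is the fallback rather than the default.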

The Continuum of Compute

As noted briefly above, the future of computation is a continuum from massive GPU mega clusters to billions of distributed edge devices in homes, factories, and pockets worldwide. The development of software enabling AI-powered applications to be built and operated across this continuum is an enormous opportunity.

Distributing computation across contributor networks significantly reduces costs. When compute is coordinated, inference that once required a centralized cluster can now be distributed across idle devices or crowd-sourced infrastructure. These systems reduce overhead, expand geographic reach, and enable better pricing. Intelligence delivered peer-to-peer (or trained collaboratively across a mesh of participants) costs less and scales naturally with demand.
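
A toy example shows where the savings come from. The sketch below assumes a hypothetical marketplace in which each contributor advertises spare capacity and a unit price, and a coordinator fills demand from the cheapest capacity first. The `Node` type, the `assign` function, and every price and capacity are invented for illustration; real networks also have to account for bandwidth, reliability, and verification, which this ignores.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    price_per_unit: float  # hypothetical price for one unit of inference work
    free_units: int        # spare capacity the node is offering


def assign(demand: int, nodes: list[Node]) -> tuple[dict[str, int], float]:
    """Greedily fill `demand` units from the cheapest nodes first.

    Returns the allocation per node and the total cost.
    Raises ValueError if the network cannot cover the demand.
    """
    plan: dict[str, int] = {}
    total_cost = 0.0
    remaining = demand
    for node in sorted(nodes, key=lambda n: n.price_per_unit):
        if remaining == 0:
            break
        take = min(node.free_units, remaining)
        if take > 0:
            plan[node.name] = take
            total_cost += take * node.price_per_unit
            remaining -= take
    if remaining > 0:
        raise ValueError(f"not enough capacity: {remaining} units unassigned")
    return plan, total_cost


if __name__ == "__main__":
    network = [
        Node("central-cluster", price_per_unit=1.0, free_units=100),
        Node("idle-laptop", price_per_unit=0.2, free_units=10),
        Node("idle-gaming-gpu", price_per_unit=0.5, free_units=20),
    ]
    plan, cost = assign(40, network)
    print("allocation:", plan)
    print("mixed cost:", cost)
    print("cluster-only cost:", 40 * 1.0)
```

Under these made-up numbers the idle devices absorb most of the demand and the central cluster only covers the remainder, which is the pricing effect the paragraph above describes.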

Policymakers have a role to play in shaping this future. Trade policy must be clarified, especially around digital goods and compute infrastructure. Export regimes must be modernized to reflect the realities of AI development, which is iterative, cross-border, and data-driven. Nations must balance the desire for control with the need for collaboration, particularly on foundational safety and interoperability standards.

In the meantime, builders will continue to adapt. We are entering an era of architectural pluralism. There will be no single dominant AI stack. Instead, we will see overlapping systems that optimize for different constraints – cost, latency, privacy, sovereignty. Success will belong to those who can compose and coordinate across these domains.

Looking Forward

The decoupling of global systems may be unwelcome, but it’s underway. The rules of the old economy were written for a world of integration, scale, and trust. The new rules are being drafted in an environment of volatility, divergence, and strategic hedging. Artificial intelligence is both shaped by and shaping this moment, and it’s not immune to geopolitics. For better or for worse, the two appear inextricably entangled.

The future will be defined by who can deliver intelligence where it’s needed most, under constraints that reflect the world as it is, not as it was. In that sense, AI will be a tool for economic productivity and a test of institutional foresight, technological realism, and geopolitical imagination.

What’s clear is this: compute will continue to flow, but it will flow in new directions, driven by new forces. Those with the largest models or the most GPUs may not win, and infinite compute may not be the moat that hyperscalers were hoping for.

Success in AI will hinge on strategic deployment, geopolitical awareness, and the ability to build systems designed for a fragmented, adversarial, and constraint-driven world.

Dr. Steven Waterhouse (PhD AI from Cambridge University) is the Founder and General Partner of Nazaré Ventures, an early-stage AI venture fund.